“Game Walkthroughs and Web Archiving” was awarded a grant in the 2021-2022 round of the Discretionary Funding Programme (DFP), the aim of which is to support the collaborative activities of the IIPC members by providing funding to accelerate the preservation and accessibility of the web. The project lead is Michael L. Nelson from the Department of Computer Science at Old Dominion University. Los Alamos National Laboratory Research Library is a project partner. You can learn more about this DFP-funded project at our Dec. 14th IIPC RSS Webinar: Game Walkthroughs and Web Archiving, where Travis Reid will be presenting on his research in greater detail.

By Travis Reid, Ph.D. student at Old Dominion University (ODU), Michael L. Nelson, Professor in the Computer Science Department at ODU, and Michele C. Weigle, Professor in the Computer Science Department at ODU

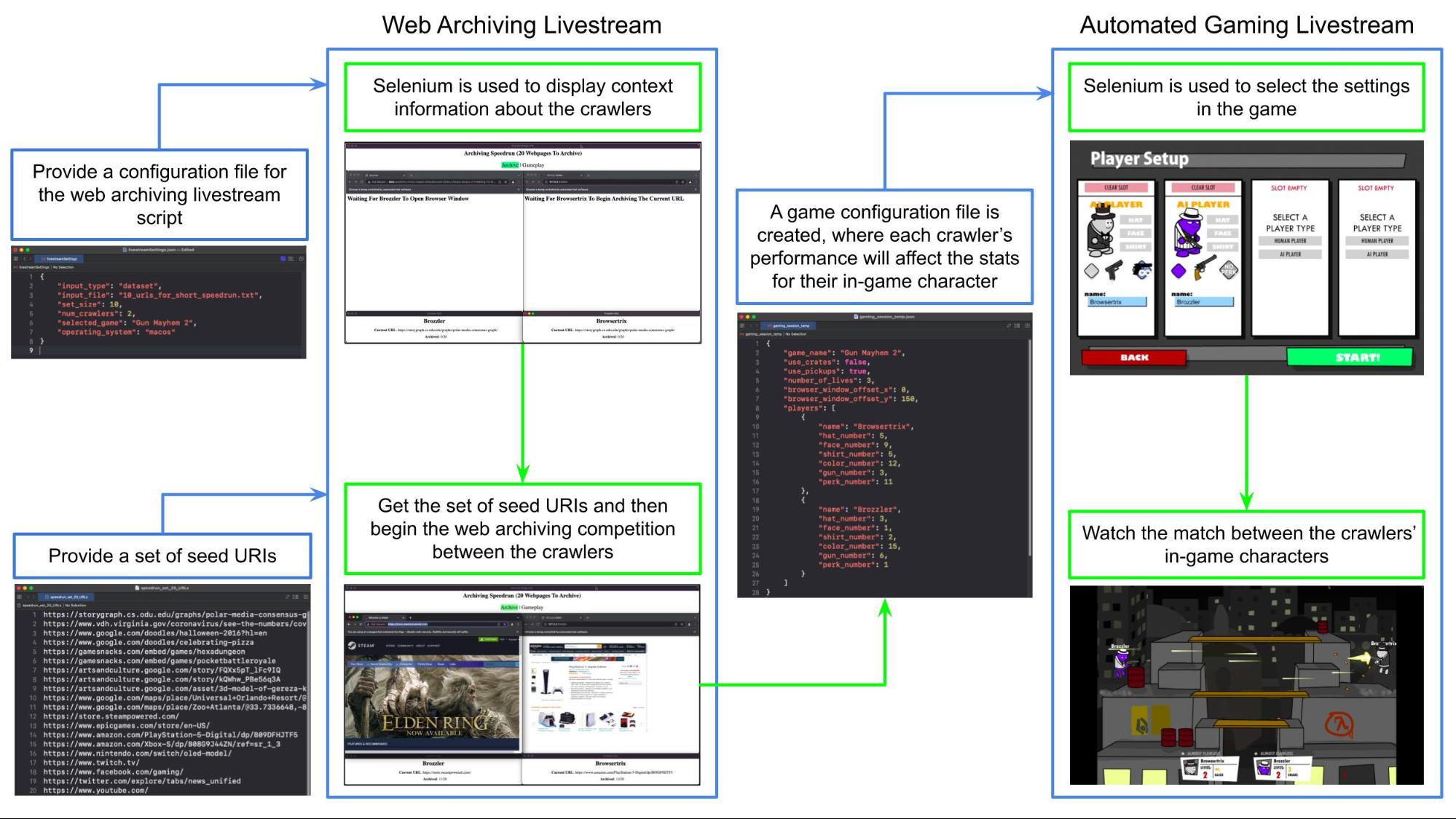

The Game Walkthroughs and Web Archiving project focuses on integrating video games with web archiving and applying gaming concepts like speedruns to the web archiving process. We have made some recent updates for this project, which involve adding a replay mode, results mode, and making it possible to have a web archiving tournament during a livestream.

Replay Mode

Replay mode (Figure 1) is used to show the web pages that were archived during the web archiving livestream and to compare the archived web pages to the live web page. During replay mode, the live web page is shown beside the archived web pages associated with each crawler. The web archiving livestream script scrolls the live web page and archived web pages so that viewers can see the differences between the live web page and the recently archived web pages. In the future when the web archiving livestream supports the use of WARC files from a crawl that was not performed recently, we will compare the archived web pages from the WARC file with a memento from a web archive like Wayback Machine or Arquivo.pt instead of comparing the archived web page against the live web page. For replay mode, we are currently using Webrecorder’s ReplayWeb.page.

Replay mode will have an option for viewing annotations created by the web archiving livestream script for the missing resources that were detected (Figure 2). The annotation option was created so that the web archiving livestream would be more like a human driven livestream where the streamer would mention potential reasons why a certain embedded resource is not being replayed properly during the livestream. Another reason for creating the annotation option is so that replay mode can show more than just web pages being scrolled and can provide some information about the elements on a web page that are associated with missing embedded resources. There will also be an option for printing an output file that contains the annotation information created by the web archiving livestream script for the missing embedded resources. For each detected missing resource, this file will include the URI-R for the missing resource, the HTTP response status code, the element that is associated with the resource, and the HTML attribute where the resource’s URI-R is extracted from.

Results Mode

We have added a results mode (Figure 3) to the web archiving livestream so that viewers can see a summary of the web archiving and replay performance results. This mode is also used to compute the score for each crawler so that we can determine which crawler has won the current round based on the archiving and replay performance. The performance metrics used during results mode is retrieved from the performance result file that is generated after the web archiving and replay sessions. Currently this performance result file includes the number of web pages archived by the crawler during the competition (number of seed URIs), the speedrun completion time for the crawler, the number of resources in the CDXJ file with an HTTP response status code of 404, the number of archived resources categorized by the file type (e.g., HTML, image, video, audio, CSS, JavaScript, JSON, XML, PDF, and fonts), and the number of missing resources categorized by the file type. The performance metrics we are currently using for determining missing and archived resources are temporary and will be replaced with a replay performance metric calculated by the memento damage service. The temporary metrics associated with missing and archived resources are calculated by going through a CDXJ file and counting the number of resources with a 200 status code for the number of archived resources and counting the number of resources with a 404 status code for the number of missing resources. Results mode will allow the viewers to access the performance results file for the round by showing a link or QR code associated with a web page that can dynamically generate the performance results from the current round and allow the viewers to download the performance results file. The web page that is shared with the viewers will also have a button that can navigate them to the video timestamp URL associated with the start of the round, so that viewers who recently joined the livestream can go back and watch the archiving and replay sessions for the current round.

Web Archiving Tournaments

A concept that we recently applied to our web archiving livestreams is web archiving tournaments. A web archiving tournament is a competition between four or more crawlers. The web archiving tournaments are currently single elimination tournaments similar to the NFL, NCAA College Basketball, and MLS Cup playoffs, where a team is eliminated from the tournament if they lose a single game. Figure 4 shows an example of teams progressing through our tournament bracket. For each match in a web archiving tournament, there will be two crawlers competing against each other. Each crawler is given the same set of URIs to archive and the set of URIs will be different for each match. The viewers will be able to watch the web archiving and replay sessions for each match. After the replay session is finished, the viewers will see a summary of the web archiving and replay performance results and how the score for each crawler is computed. The crawler with the highest score will be the winner of the match and will progress further in the web archiving tournament. When a crawler loses a match it will not be able to compete in any future matches in the current tournament. The winner of the web archiving tournament will be the crawler that has won every match that it has participated in during the tournament. The web archiving tournament will be updated in the future to support other types of tournaments like double elimination tournament where teams can lose more than once, round robin tournament where teams play each other an equal amount of times, or a combination like the FIFA World Cup that uses round robin for the group stage and single elimination for the knockout phase.

Future work

We will apply more gaming concepts to our web archiving livestreams, like having tag-team matches and a single player mode. For a tag-team match, we would have multiple crawlers working together on the same team when archiving a set of URIs. For a single player mode, we could allow the streamer or viewers to select one crawler to use when playing a level.

We are accepting suggestions for video games that will be integrated with our web archiving livestreams and shown during our gaming livestreams. The game must have a mode where we can watch automated gameplay that uses bots (computer players) and there needs to be customization for the bots that can improve the skill level, stats, or abilities for the bot. Call of Duty: Vanguard is an example of a game that can be used during our gaming livestream. In a custom match for Call of Duty: Vanguard, the skill level for the bots can be changed individually for each bot and we can change the number of players added to each team (Figure 5). This game also has other team customization options (Figure 6) that are recommended for games used during our gaming livestream but are not required like being able to change the name of the team and choose the team colors. Call of Duty: Vanguard also has a spectator mode named CoDCaster (Figure 7) where we can watch a match between the bots.

An example of a game that will not be used during our gaming livestream is Rocket League. When creating a custom match in Rocket League it is not possible to make one bot have better stats or skills than the other bots in a match. The skill level for the bots in Rocket League is applied to all bots and cannot be individually set for each bot (Figure 8).

A single player game like Pac-Man also cannot be played during our automated gaming livestream, because a human player is needed in order to play the game (Figure 9). If there are any games that you would like to see during our gaming livestream where we can spectate the gameplay of computer players, then you can use this Google Form to suggest the game.

Summary

Our recent updates to the web archiving livestreams are adding a replay mode, results mode, and an option for having a web archiving tournament. Replay mode allows the viewers to watch the replay of the web pages that were archived during the web archiving livestream. Results mode shows a summary of the web archiving and replay performance results that were measured during the livestream and shows the match scores for the crawlers. The web archiving tournament option allows us to have a competition between four web archive crawlers and to determine which crawler performed the best during the livestream.

If you have any questions or feedback, you can email Travis Reid at treid003@odu.edu.

IIPC is excited to announce the launch of its

IIPC is excited to announce the launch of its